- Sample code

- Example use cases

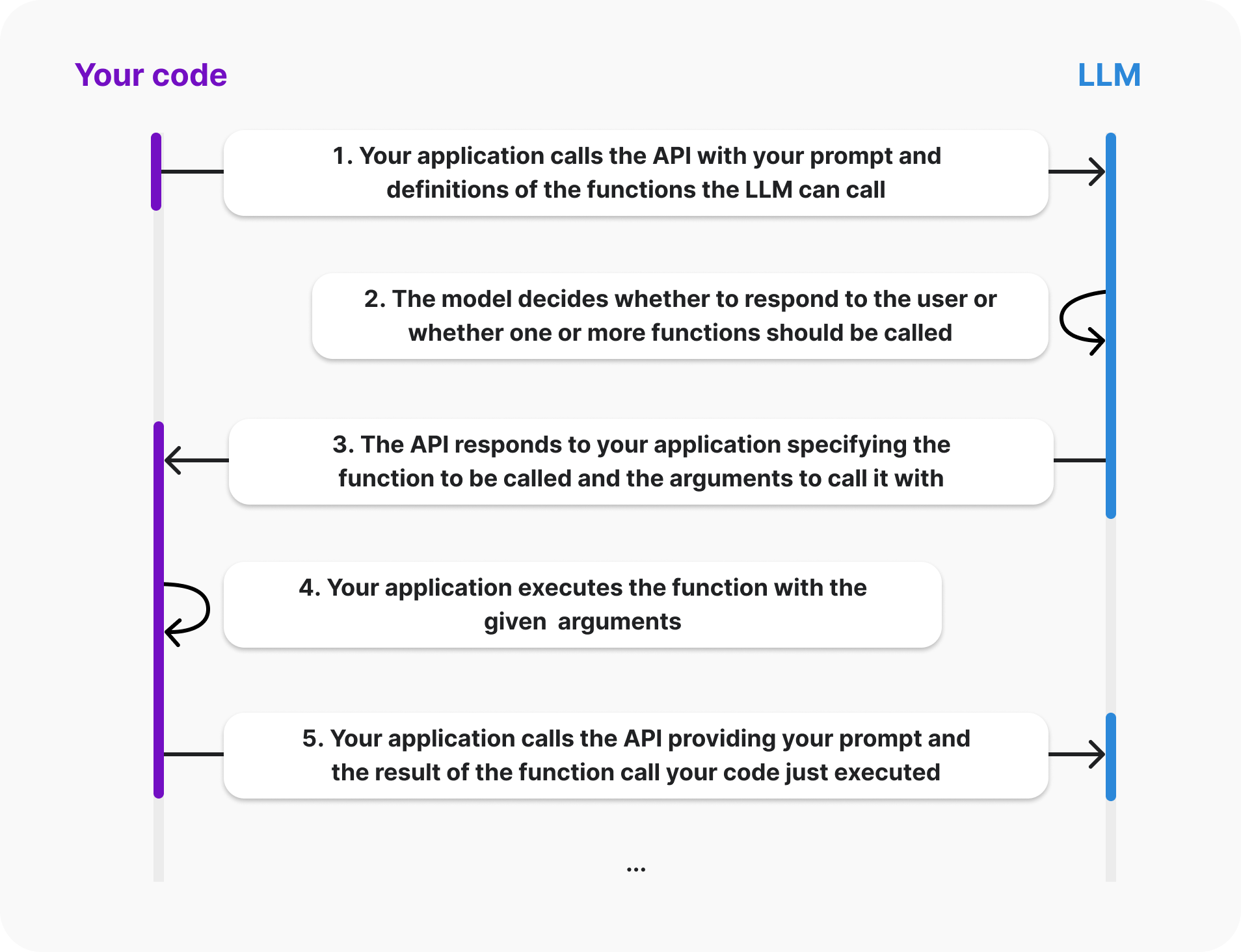

- The lifecycle of a function call

- Function call mechanisms

- Lessons learnt/Challenges faced so far

Function calling lets us connect models such as gpt-4o to external tools and systems. This is useful for giving AI assistants new capabilities, or for building deep integrations between your applications and the models.

Sample code

Refer to the GitHub example(s) for more details:

- Course 1: …

- Course 2: DeepLearning.AI - Functions, Tools and Agents with LangChain

Example use cases

Function calling is useful for a large number of use cases, such as:

- Enabling assistants to fetch data: an AI assistant needs to fetch the latest customer data from an internal system when a user asks “what are my recent orders?” before it can generate the response to the user.

- Enabling assistants to take actions: an AI assistant needs to schedule meetings based on user preferences and calendar availability.

- Enabling assistants to perform computation: a math tutor assistant needs to perform a math computation.

- Building rich workflows: a data extraction pipeline that fetches raw text, then converts it to structured data and saves it in a database.

- Modifying your applications’ UI: you can use function calls that update the UI based on user input, for example, rendering a pin on a map.
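On the application side, these use cases share one pattern: the model emits a function name plus JSON-encoded arguments, and the application runs the matching code and returns the result. A minimal sketch of that dispatch step follows; the tool names (`get_recent_orders`, `add_numbers`) and the `dispatch` helper are hypothetical and stand in for real internal systems.

```python
import json

# Hypothetical local tools; a real system would call internal services here.
def get_recent_orders(customer_id: str) -> list:
    """Return recent orders for a customer (stubbed with static data)."""
    return [{"order_id": "A-1", "customer_id": customer_id}]

def add_numbers(a: float, b: float) -> float:
    """Add two numbers."""
    return a + b

# Registry mapping the function name the model emits to a Python callable.
TOOLS = {"get_recent_orders": get_recent_orders, "add_numbers": add_numbers}

def dispatch(name: str, arguments_json: str) -> str:
    """Run the requested tool and return its result as a JSON string."""
    if name not in TOOLS:
        return json.dumps({"error": f"unknown function: {name}"})
    kwargs = json.loads(arguments_json)  # arguments arrive as a JSON string
    return json.dumps({"result": TOOLS[name](**kwargs)})

print(dispatch("add_numbers", '{"a": 2, "b": 3}'))
```

Returning an error payload for an unknown name, rather than raising, lets the application pass the failure back to the model as an ordinary function result.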

The lifecycle of a function call

Function call mechanisms

Vanilla OpenAI Function Calls

“Vanilla OpenAI Function Calls” refers to the basic or default implementation of OpenAI’s function-calling mechanism, without additional frameworks or libraries enhancing its capabilities. It involves directly defining and managing function schemas and handling function outputs according to OpenAI’s API documentation, typically using JSON-based schemas.

Key Features of Vanilla OpenAI Function Calls:

- Basic Schema Definition: You define the structure of the input and output using JSON Schema syntax manually.

- Error Handling: Handling errors or validation issues must be coded explicitly.

- Parsing and Validation: Parsing the outputs and ensuring they meet the required schema is typically done using custom or manual logic.

- Flexibility: While flexible, it may require repetitive boilerplate code to manage schema definitions and validation logic.
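To make the "manual logic" above concrete, here is a rough sketch of hand-written parsing and validation for a function that takes two numeric arrays, `x` and `y`. The helper name `parse_plot_arguments` is hypothetical, and the checks are illustrative rather than exhaustive.

```python
import json

def parse_plot_arguments(arguments_json: str) -> dict:
    """Parse and validate function-call arguments by hand (no validation library)."""
    try:
        args = json.loads(arguments_json)
    except json.JSONDecodeError as exc:
        raise ValueError(f"arguments are not valid JSON: {exc}") from exc
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    for key in ("x", "y"):
        values = args.get(key)
        if not isinstance(values, list):
            raise ValueError(f"'{key}' is required and must be an array")
        # bool is a subclass of int in Python, so exclude it explicitly
        if not all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in values):
            raise ValueError(f"'{key}' must contain only numbers")
    return args

print(parse_plot_arguments('{"x": [1, 2], "y": [3, 4]}'))
```

Every new function needs a similar block of checks, which is the repetitive boilerplate the list refers to.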

Example

```python
openai_function = {
    "type": "function",
    "function": {
        "name": "plot_some_points",
        "description": "Plots some points using matplotlib!",
        "parameters": {
            "type": "object",
            "properties": {
                "x": {"type": "array", "items": {"type": "number"}},
                "y": {"type": "array", "items": {"type": "number"}}
            },
            "required": ["x", "y"]
        }
    }
}
```

OpenAISchema Powered by Pydantic

When Pydantic is introduced (via the openai-schema package), it builds on the Vanilla OpenAI Function Calls by leveraging Pydantic’s robust data validation and management features. Pydantic is a library that provides easy-to-use, declarative data models in Python.

For a comparison between OpenAPI and OpenAISchema, refer to OpenAPI > Section: OpenAPI Specification vs. OpenAISchema comparison

Key Features:

- Declarative Models: Instead of manually crafting JSON Schemas, you define Python classes using Pydantic, which automatically generates JSON Schemas.

- Example:

  ```python
  from pydantic import BaseModel

  class Item(BaseModel):
      name: str
      price: float
  ```

- Automatic Validation: Input and output validation are handled automatically, ensuring adherence to the schema without additional manual checks.

- Error Messaging: Clear and structured error messages when validation fails.

- Serialization/Deserialization: Pydantic easily converts between Python objects and JSON, simplifying the handling of API inputs and outputs.

- Integration with OpenAI Function Calls: Pydantic-powered schemas can be seamlessly integrated into OpenAI’s function calls for better maintainability and reduced boilerplate.
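The validation, error messaging, and serialization features listed above can be sketched with the `Item` model from the earlier bullet. This assumes Pydantic v2 (`model_validate_json`, `model_dump_json`); the input strings are made up for illustration.

```python
from pydantic import BaseModel, ValidationError

class Item(BaseModel):
    name: str
    price: float

# Deserialization and validation happen in one step (Pydantic v2 API).
item = Item.model_validate_json('{"name": "pen", "price": "1.5"}')
print(item.price)  # the numeric string is coerced to a float

# Serialization back to JSON.
print(item.model_dump_json())

# Invalid input raises ValidationError, which carries a structured error list.
try:
    Item.model_validate_json('{"name": "pen", "price": "cheap"}')
except ValidationError as exc:
    errors = exc.errors()
    print(errors[0]["loc"], errors[0]["msg"])
```

Each entry in `exc.errors()` names the failing field in `loc`, which makes it practical to feed the error back to the model and ask for corrected arguments.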

Example

```python
import json
from typing import Any, Dict, List

import matplotlib.pyplot as plt
from pydantic import BaseModel, Field, field_validator


class PlotStructure(BaseModel):
    x: List[float] = Field(description="X values")
    y: List[float] = Field(description="Y values")

    @field_validator('y')
    @classmethod
    def validate_dimensions(cls, y, info):
        # info.data holds the fields validated so far; x is declared before y
        if 'x' in info.data and len(info.data['x']) != len(y):
            raise ValueError("x and y arrays must have same length")
        return y

    @classmethod
    def get_openai_schema(cls, debug=False) -> Dict[str, Any]:
        """Generate the OpenAI function schema from the Pydantic model"""
        schema = cls.model_json_schema()
        openai_schema = {
            "type": "function",
            "function": {
                "name": "plot_some_points",
                "description": "Plots some points using matplotlib!",
                "parameters": schema
            }
        }
        if debug:
            print(json.dumps(openai_schema, indent=2))
        return openai_schema


def plot_some_points(config: PlotStructure) -> None:
    """Create a plot with the given configuration"""
    try:
        plt.figure(figsize=(8, 6))
        plt.plot(config.x, config.y)
        plt.grid(True)
        plt.show()
    except Exception as e:
        print(f"Error plotting: {str(e)}")


# Usage
plot_some_points(PlotStructure(x=[1, 2, 3], y=[10, 20, 30]))
```

Comparison

| Feature | Vanilla OpenAI Function Calls | OpenAISchema (Pydantic) |
|---|---|---|
| Schema Definition | Manual JSON Schema | Declarative Python classes using Pydantic |
| Validation | Manual, error-prone | Automatic and robust |
| Error Handling | Requires custom logic | Built-in with clear error messages |
| Ease of Use | More boilerplate | Simplifies schema management and reduces code overhead |
| Flexibility | Fully customizable | Highly customizable but constrained by Pydantic |
| JSON Serialization/Deserialization | Requires manual implementation | Automatic |

Why Use OpenAISchema with Pydantic?

The combination of OpenAI Function Calls and Pydantic enables developers to focus on defining clean and reusable schemas while letting Pydantic handle the heavy lifting of validation and parsing. It’s particularly useful in production systems where data quality and schema adherence are critical.

Lessons learnt/Challenges faced so far

- Docstrings matter (refer to 3-Function-calling-variations.ipynb)

- The model struggles with nested APIs or function calls (refer to 3-Function-calling-variations.ipynb)

- A similar issue appears when it needs to make nested function calls by handling JSON values (refer to 5-Structured-Extraction.ipynb and L4-tagging-and-extraction.ipynb)

- We can automate this using convert_pydantic_to_openai_function (refer to L3-function-calling.ipynb, and more) in DeepLearning.AI - Functions, Tools and Agents with LangChain
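One reason docstrings matter is that, when the schema is generated automatically, the docstring typically becomes the `description` the model reads when deciding whether to call the function. The sketch below illustrates the idea using only the standard library. It is not LangChain's implementation; `function_to_openai_schema`, `JSON_TYPES`, and `get_weather` are hypothetical names, and only simple scalar annotations are handled.

```python
import inspect
from typing import Any, Dict

# Assumed mapping from Python annotations to JSON Schema type names.
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

def function_to_openai_schema(func) -> Dict[str, Any]:
    """Build an OpenAI-style function schema from a signature and docstring."""
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        # Unknown or missing annotations fall back to "string" in this sketch.
        properties[name] = {"type": JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            # The docstring becomes the description sent to the model.
            "description": inspect.getdoc(func) or "",
            "parameters": {"type": "object", "properties": properties, "required": required},
        },
    }

def get_weather(city: str, days: int = 1) -> str:
    """Return the weather forecast for a city."""
    return f"Forecast for {city} over {days} day(s)"

schema = function_to_openai_schema(get_weather)
print(schema["function"]["description"])
```

A function with an empty or vague docstring produces an empty or vague description, which gives the model little to go on when choosing between functions.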