- Word2Vec: one of the most popular and earliest examples of word embeddings.

- Word2Vec trains a shallow neural network to generate word embeddings, either by predicting the context words given a target word (skip-gram) or by predicting the target word given its context words (CBOW, continuous bag-of-words).
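The two prediction directions above differ mainly in how training examples are cut from text. The sketch below shows only that step, assuming a fixed context window of `window` words on each side. The network that learns from these examples is not shown. `skipgram_pairs` and `cbow_examples` are hypothetical helper names for illustration, not part of any library.

```python
from typing import List, Tuple


def skipgram_pairs(tokens: List[str], window: int = 2) -> List[Tuple[str, str]]:
    """Return (target, context) pairs: skip-gram predicts each context word from the target."""
    pairs = []
    for i, target in enumerate(tokens):
        # Context = words within `window` positions on either side of the target.
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs


def cbow_examples(tokens: List[str], window: int = 2) -> List[Tuple[List[str], str]]:
    """Return (context_words, target) examples: CBOW predicts the target from its context."""
    examples = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, target))
    return examples


sentence = "the quick brown fox jumps".split()
print(skipgram_pairs(sentence, window=1))
print(cbow_examples(sentence, window=1))
```

With `window=1`, skip-gram yields one example per (target, neighbor) pair, such as `("quick", "the")` and `("quick", "brown")`. CBOW yields one example per target, such as `(["the", "brown"], "quick")`.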

SUMMARY

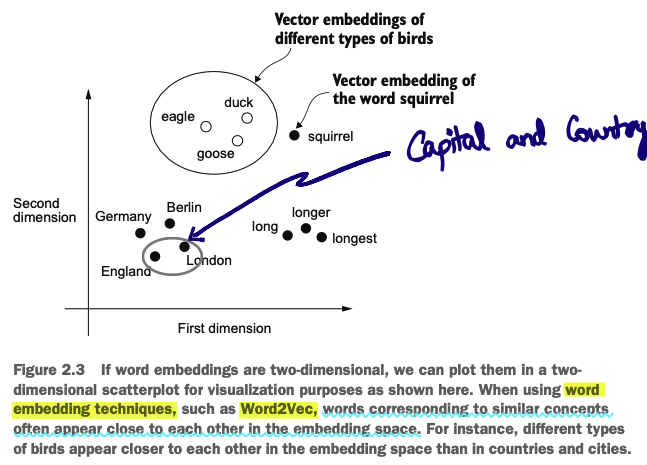

The main idea behind Word2Vec is that words appearing in similar contexts tend to have similar meanings. Consequently, when the embeddings are projected into two dimensions for visualization, similar words cluster together.
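Closeness between embeddings is commonly measured with cosine similarity. A 2-D view like the one above can be produced with a dimensionality-reduction method. The notes do not say which method the figure used. The sketch below uses PCA as one common choice (t-SNE is another). The 4-dimensional vectors are made up for illustration, not trained Word2Vec output.

```python
import numpy as np


def cosine_similarity(u: np.ndarray, v: np.ndarray) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))


def project_2d(vectors: np.ndarray) -> np.ndarray:
    """Project row vectors to 2-D with PCA (via SVD of the mean-centered matrix)."""
    centered = vectors - vectors.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T


# Hypothetical 4-dimensional embeddings, invented for illustration (not trained).
words = ["cat", "dog", "car", "truck"]
emb = np.array([
    [0.9, 0.8, 0.1, 0.0],  # cat
    [0.8, 0.9, 0.0, 0.1],  # dog
    [0.1, 0.0, 0.9, 0.8],  # car
    [0.0, 0.1, 0.8, 0.9],  # truck
])

print(cosine_similarity(emb[0], emb[1]))  # cat vs dog: about 0.99
print(cosine_similarity(emb[0], emb[2]))  # cat vs car: about 0.12
for word, (x, y) in zip(words, project_2d(emb)):
    print(f"{word}: ({x:.2f}, {y:.2f})")
```

In the 2-D projection, "cat" and "dog" land near each other and far from "car" and "truck". The sign of each PCA axis is arbitrary, so the exact coordinates may be mirrored between runs or libraries.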

TODO

- Understand how Word2Vec is designed and trained