Day 0: Troubleshooting and FAQs

- Kaggle notebook

- API key page in AI Studio

- Sign up for a Discord account and join us on the Kaggle Discord server.

We have the following channels dedicated to this event:

- #5dgai-announcements: find official course announcements and livestream recordings.

- #5dgai-introductions: introduce yourself and meet other participants from around the world.

- #5dgai-question-forum: Discord forum-style channel for asking questions and discussions about the assignments.

- #5dgai-general-chat: a general channel to discuss course materials and network with other participants.

Day 1: Foundational Large Language Models & Text Generation and Prompt Engineering

- 📝 My Kaggle Notebooks

- 🎒Assignment

- Complete the Intro Unit – “Foundational Large Language Models & Text Generation”:

- Listen to the summary podcast episode for this unit.

- To complement the podcast, read the “Foundational Large Language Models & Text Generation” whitepaper.

- Complete Unit 1 – “Prompt Engineering”:

- Listen to the summary podcast episode for this unit.

- To complement the podcast, read the “Prompt Engineering” whitepaper.

- Complete these codelabs on Kaggle:

- Make sure you phone-verify your Kaggle account before starting; it’s necessary for the codelabs.

- Want to have an interactive conversation? Try adding the whitepapers to NotebookLM.


- 💡What You’ll Learn

- Today you’ll explore the evolution of LLMs, from transformers to techniques like fine-tuning and inference acceleration. You’ll also get trained in the art of prompt engineering for optimal LLM interaction.

- The code lab will walk you through getting started with the Gemini API and cover several prompt techniques and how different parameters impact the prompts.

Summary of the key points & callouts

Day 1 - Prompting [TP]

- The examples were built leveraging Gemini 2.0 and the latest `google-genai` SDK

- Gemini models covered are `gemini-2.0-flash` and `gemini-2.0-flash-thinking-exp`

Thinking model: Trained to generate the "thinking process" the model goes through as part of its response. This provides us with high-quality responses without needing specialised prompting like CoT or ReAct.

- Two types of content generation covered are

  - `client.models.generate_content`: single-turn text-in/text-out structure.

  - `client.models.generate_content_stream`: instead of waiting for the entire response, the model sends back chunks of the generated content as they become available, as an iterable.

- `client.chats.create`: multi-turn chat structure, including access to chat history.

- Configs covered are `temperature`, `top_p`, and `max_output_tokens`

- `max_output_tokens`: specifying this parameter does not influence the generation of the output tokens, so the output will not become more stylistically or textually succinct, but generation stops once the specified length is reached.
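To make the effect of these configs concrete, here is a minimal sketch on a toy three-token vocabulary. It is not how Gemini samples internally; the logits, the function names, and the seed are all made up for illustration. It shows temperature sharpening or flattening the distribution, top-p keeping only the most likely tokens, and `max_output_tokens` acting purely as a stop condition.

```python
import math
import random


def next_token_distribution(logits, temperature=1.0, top_p=1.0):
    """Return {token: probability} after temperature scaling and top-p filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    # Temperature rescales logits: below 1 sharpens, above 1 flattens.
    scaled = {tok: logit / temperature for tok, logit in logits.items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    probs = {tok: e / total for tok, e in exps.items()}

    # Top-p keeps the smallest set of most likely tokens whose mass reaches top_p.
    kept, mass = {}, 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = p
        mass += p
        if mass >= top_p:
            break
    return {tok: p / mass for tok, p in kept.items()}


def generate(logits, max_output_tokens, temperature=1.0, top_p=1.0, seed=0):
    """Sample tokens from a fixed toy distribution until the token limit is hit."""
    rng = random.Random(seed)
    dist = next_token_distribution(logits, temperature, top_p)
    tokens, weights = zip(*dist.items())
    # max_output_tokens is only a hard stop; it does not change the distribution.
    return [rng.choices(tokens, weights=weights)[0] for _ in range(max_output_tokens)]


toy_logits = {"cat": 2.0, "dog": 1.0, "fish": 0.1}  # hypothetical values
sharp = next_token_distribution(toy_logits, temperature=0.5)
flat = next_token_distribution(toy_logits, temperature=2.0)
nucleus = next_token_distribution(toy_logits, top_p=0.6)
sample = generate(toy_logits, max_output_tokens=5)
```

With these toy logits, the low-temperature distribution puts more mass on `cat` than the high-temperature one, and `top_p=0.6` leaves `cat` as the only candidate.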

- Prompting techniques covered are

- Zero-shot Prompting

- One-shot Prompting

- Few-shot Prompting

- Chain of Thought (CoT)

- Reason only

- ReAct: Reason and Act

- Thought > Action > Observation > …

- Techniques to enforce the LLM output to follow the supplied schema

  - Enum mode

  - JSON mode: can be achieved using `typing.TypedDict` or a `dataclass`
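As a rough illustration of what enum mode and JSON mode guarantee, the sketch below defines a schema with `enum.Enum` and `typing.TypedDict` and checks replies against it. No API call is made: the reply strings are hard-coded, hypothetical stand-ins for model output, and `parse_sentiment` and `parse_order` are helper names invented for this note.

```python
import enum
import json
from typing import TypedDict, get_type_hints


class Sentiment(enum.Enum):
    POSITIVE = "positive"
    NEUTRAL = "neutral"
    NEGATIVE = "negative"


class PizzaOrder(TypedDict):
    size: str
    ingredients: list[str]
    type: str


def parse_sentiment(raw: str) -> Sentiment:
    # Enum mode: the reply must be exactly one of the allowed values.
    return Sentiment(raw.strip())


def parse_order(raw: str) -> PizzaOrder:
    # JSON mode: the reply must be a JSON object with exactly the schema's keys.
    data = json.loads(raw)
    expected = set(get_type_hints(PizzaOrder))
    if not isinstance(data, dict) or set(data) != expected:
        raise ValueError(f"reply does not match schema keys {sorted(expected)}")
    return PizzaOrder(**data)


# Hypothetical model replies, hard-coded so the sketch runs offline.
sentiment = parse_sentiment("positive\n")
order = parse_order(
    '{"size": "large", "ingredients": ["apple", "chocolate"], "type": "dessert"}'
)
```

This only checks key names, not value types; a stricter validator would be needed for anything beyond a quick sanity check.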

- Code prompting scenarios covered are

- Generating code

- Code execution

- Can automatically run generated code using `ToolCodeExecution`

- Explaining code

Day 1 - Evaluation and structured output [TP]

- Gemini Models covered are

gemini-2.0-flash

- Concepts covered include

- Summarising a (pdf) document

- Evaluating question answering quality

- Gauge the quality of the LLM generated summary for the user question on a document.

- Criteria used are

- Instruction following

- Groundedness

- Completeness

- Conciseness

- Fluency

- Two popular model-based metrics covered are:

- Pointwise evaluation: Evaluate a single I/O pair against some criteria (e.g., 5 levels of grading)

- Pairwise evaluation: Compare two outputs against each other and pick the better one (e.g., compare against a baseline model response).

- Caching & Memoization
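A small sketch of how pointwise evaluation and memoization fit together, assuming the judge is an expensive call worth caching. The judge here is a fake, deterministic function rather than an LLM, and `build_judge_prompt`, `fake_judge`, and `pointwise_score` are names made up for this note.

```python
import functools

CRITERIA = (
    "instruction following",
    "groundedness",
    "completeness",
    "conciseness",
    "fluency",
)


def build_judge_prompt(question: str, response: str) -> str:
    """Assemble a pointwise grading prompt listing the criteria."""
    criteria = ", ".join(CRITERIA)
    return (
        f"Rate the response from 1 to 5 on: {criteria}.\n"
        f"Question: {question}\nResponse: {response}"
    )


def fake_judge(prompt: str) -> int:
    """Hypothetical stand-in for an LLM judge; returns a rating from 1 to 5."""
    # Toy rule only, so the example runs offline and deterministically.
    return 1 + len(prompt) % 5


@functools.cache
def pointwise_score(question: str, response: str) -> int:
    # Memoized: the same (question, response) pair is only judged once.
    return fake_judge(build_judge_prompt(question, response))


question = "What is the document about?"
response = "It describes how to evaluate generated summaries."
first = pointwise_score(question, response)
second = pointwise_score(question, response)  # served from the cache
```

`pointwise_score.cache_info()` reports hits and misses, which is a quick way to confirm that repeated evaluations are not re-sent to the judge.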

Day 2: Embeddings & Vector Stores/Databases

- 📝 My Kaggle Notebooks

- 🎒Assignment

- Complete Unit 2: “Embeddings and Vector Stores/Databases”, which is:

- [Optional] Listen to the summary podcast episode for this unit (created by NotebookLM).

- Read the “Embeddings and Vector Stores/Databases” whitepaper.

- Complete these code labs on Kaggle:


- 💡What You’ll Learn

- Today you will learn about the conceptual underpinning of embeddings and vector databases and how they can be used to bring live or specialist data into your LLM application. You’ll also explore their geometrical powers for classifying and comparing textual data.

Summary of the key points & callouts

Day 2 - Document Q&A with RAG [TP]

- Gemini Models covered include

text-embedding-004

- Two embedding task types (official documentation) covered are

- `retrieval_document`: specifies the given text is a document from the corpus being searched.

- `retrieval_query`: specifies the given text is a query in a search/retrieval setting.

- ChromaDB methods (official documentation) covered are

- `db.add(...)`

- `db.count()`

- `db.peek(...)`

- `db.query(...)`

- RAG Concepts covered

- 0. Creating embeddings

- 1. Retrieval: Finding relevant documents

- 2 & 3. Augmented Generation: Answer the questions
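The RAG steps above can be sketched end to end without an embedding model or ChromaDB. In this toy version the 3-dimensional vectors are hand-written stand-ins for real embeddings, the documents are invented, and `retrieve` and `build_prompt` are hypothetical helpers; the final prompt would be sent to the model for the generation step.

```python
DOCUMENTS = {
    "climate": "Turn the climate dial clockwise to raise the temperature.",
    "touchscreen": "Tap the Music icon on the touchscreen to play songs.",
    "shifting": "Move the gear lever to D to drive forward.",
}

# Step 0: hand-written stand-ins for document embeddings (not real model output).
DOC_VECTORS = {
    "climate": [0.9, 0.1, 0.0],
    "touchscreen": [0.1, 0.9, 0.1],
    "shifting": [0.0, 0.2, 0.9],
}


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def retrieve(query_vector, k=1):
    """Step 1: return the k passages whose vectors score highest against the query."""
    ranked = sorted(
        DOC_VECTORS,
        key=lambda doc_id: dot(query_vector, DOC_VECTORS[doc_id]),
        reverse=True,
    )
    return [DOCUMENTS[doc_id] for doc_id in ranked[:k]]


def build_prompt(question, passages):
    """Step 2: augment the question with the retrieved passages."""
    context = "\n".join(f"PASSAGE: {passage}" for passage in passages)
    return (
        "Answer the question using only the passages below.\n"
        f"QUESTION: {question}\n{context}"
    )


question = "How do I drive forward?"
query_vector = [0.1, 0.1, 0.95]  # stand-in for the embedded query
passages = retrieve(query_vector, k=1)
prompt = build_prompt(question, passages)  # Step 3 would pass this to the model
```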

Day 2 - Embeddings and similarity scores [TP]

- Gemini Models covered include

text-embedding-004

- Embedding task types (official documentation) covered are

semantic_similarity

- Concepts covered include

- Similarity score using cosine similarity (or dot product). More details here
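For reference, a minimal pure-Python sketch of cosine similarity and why the dot product can stand in for it: once both vectors are scaled to unit length, the two give the same number. The vectors are toy values, not real embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


a = [1.0, 2.0, 2.0]
b = [2.0, 4.0, 4.0]   # same direction as a
c = [2.0, -1.0, 0.0]  # orthogonal to a

same_direction = cosine_similarity(a, b)
orthogonal = cosine_similarity(a, c)
# For unit-length vectors the plain dot product equals the cosine similarity.
dot_of_units = sum(x * y for x, y in zip(normalize(a), normalize(b)))
```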

Day 2 - Classifying embeddings with Keras [TP]

- Gemini Models covered include

text-embedding-004

- Embedding task types (official documentation) covered are

classification

- Concepts covered include

- Building a simple classification model with 1 hidden layer (and ~0.6M trainable params) using `keras.Sequential`
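A quick arithmetic check of the ~0.6M figure, under assumptions not stated in these notes: a 768-dimensional embedding input, a hidden dense layer of the same width, and 4 output classes. A dense layer has `inputs * units` weights plus `units` biases.

```python
def dense_params(inputs: int, units: int) -> int:
    """Trainable parameters of a dense layer: weights plus biases."""
    return inputs * units + units


EMBEDDING_DIM = 768  # assumed embedding size
HIDDEN_UNITS = 768   # assumption: hidden layer as wide as the input
NUM_CLASSES = 4      # hypothetical number of classes

hidden = dense_params(EMBEDDING_DIM, HIDDEN_UNITS)
output = dense_params(HIDDEN_UNITS, NUM_CLASSES)
total = hidden + output
print(f"hidden={hidden:,} output={output:,} total={total:,}")
```

Under these assumptions the hidden layer dominates the count, so the total stays close to 0.6M for any small number of classes.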

Day 3: AI Agents

- 📝 My Kaggle Notebooks

- 🎒Assignment

- Complete Unit 3: “Generative AI Agents”, which is:

- [Optional] Listen to the summary podcast episode for this unit (created by NotebookLM).

- Read the “Generative AI Agents” whitepaper.

- Complete these code labs on Kaggle:


- 💡What You’ll Learn

- Learn to build sophisticated AI agents by understanding their core components and the iterative development process.

- The code labs cover how to connect LLMs to existing systems and to the real world. Learn about function calling by giving SQL tools to a chatbot, and learn how to build a LangGraph agent that takes orders in a café.

Summary of the key points & callouts

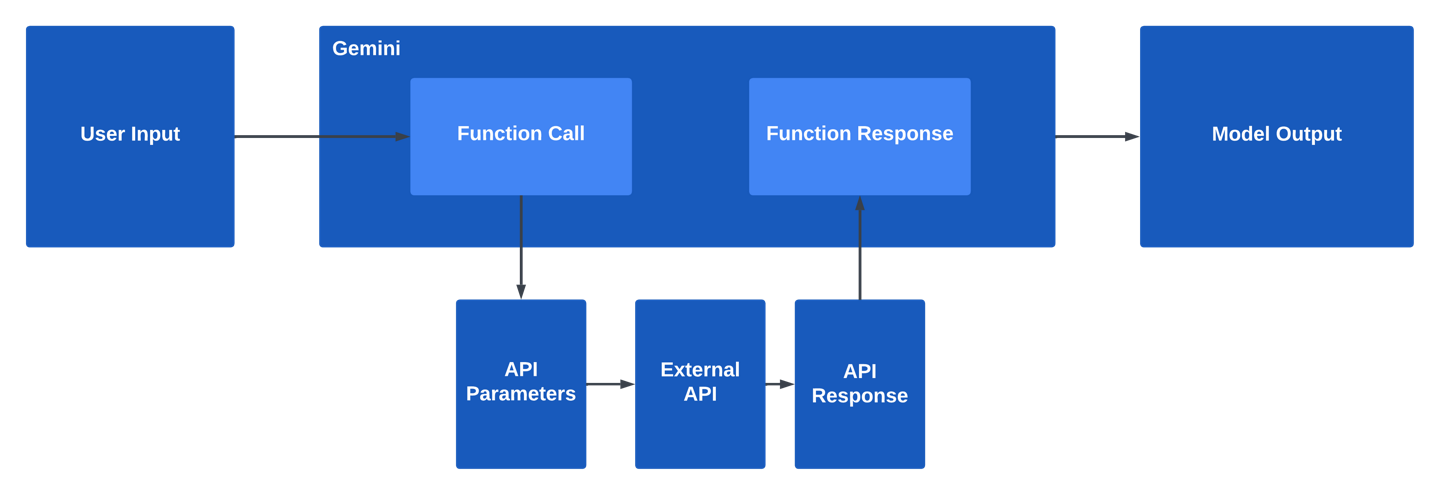

Day 3 - Function calling with the Gemini API [TP]

- Gemini models covered include

- `gemini-2.0-flash`

- `gemini-2.0-flash-exp`

- Concepts covered include

- Function calling (leveraging OpenAPI schema) with tools

- Three important parts that can be observed in chat history are 1) `text`, 2) `function_call`, 3) `function_response`

- Compositional function calling

Need to revisit the "Compositional function calling" concept, as the code was not working completely as expected.
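To keep the three chat-history parts straight, here is an offline sketch of one function-calling turn. The model's output is scripted by hand, the dictionaries only mimic the `text` / `function_call` / `function_response` shape and are not the SDK's actual types, and `list_tables` and `run_turn` are illustrative names backed by an in-memory SQLite database.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE products (name TEXT, price REAL)")


def list_tables() -> list[str]:
    """Tool: return the table names in the database."""
    rows = db.execute("SELECT name FROM sqlite_master WHERE type='table'").fetchall()
    return [row[0] for row in rows]


TOOLS = {"list_tables": list_tables}


def run_turn(model_parts, tools):
    """Record each model part; execute function calls and append their responses."""
    history = []
    for part in model_parts:
        history.append(part)
        if "function_call" in part:
            call = part["function_call"]
            result = tools[call["name"]](**call["args"])
            history.append(
                {"function_response": {"name": call["name"], "response": {"result": result}}}
            )
    return history


# Scripted stand-in for what a model might emit; no API call is made.
scripted_parts = [
    {"text": "I will check which tables exist."},
    {"function_call": {"name": "list_tables", "args": {}}},
]
history = run_turn(scripted_parts, TOOLS)
part_kinds = [next(iter(part)) for part in history]
```

In a real session the `function_response` part would be sent back to the model, which then produces the final `text` answer.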

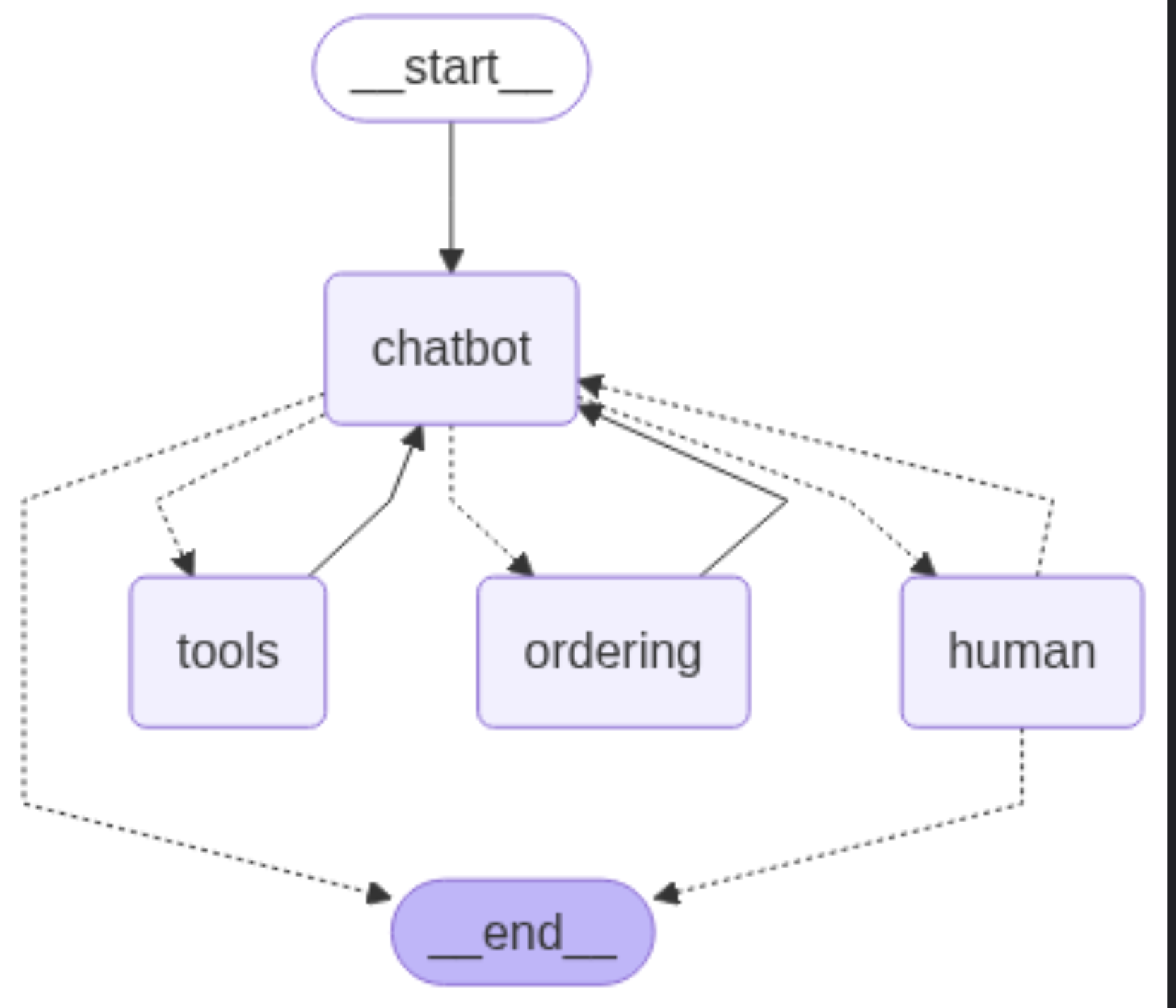

Day 3 - Building an agent with LangGraph [TP]

- Gemini models covered include

gemini-2.0-flash

- Concepts covered include

- LangGraph

- graph structure

- state schema

- node (action or a step)

- edge (transition between states)

- conditional edge

- tools

- stateless tools

- stateful tools


The model should not have direct access to the app's internal state, or it risks being manipulated arbitrarily. Rather, we provide tools that update the state.
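The stateless versus stateful distinction can be sketched in plain Python. This is not the LangGraph API: `OrderState`, `get_menu`, `add_to_order`, and `order_node` are names invented for this note. The point is that the model only proposes a tool call, and application code decides how the state changes.

```python
from typing import TypedDict


class OrderState(TypedDict):
    messages: list[str]
    order: list[str]
    finished: bool


def get_menu() -> str:
    """Stateless tool: needs nothing from the app state."""
    return "latte, espresso, tea"


def add_to_order(state: OrderState, item: str) -> OrderState:
    """Stateful tool: returns a new state instead of mutating the old one."""
    return {**state, "order": state["order"] + [item]}


def order_node(state: OrderState, tool_call: dict) -> OrderState:
    """App-side step that applies a tool call requested by the model."""
    name, args = tool_call["name"], tool_call["args"]
    if name == "add_to_order":
        return add_to_order(state, args["item"])
    if name == "clear_order":
        return {**state, "order": []}
    raise ValueError(f"unknown tool: {name}")


initial: OrderState = {"messages": [], "order": [], "finished": False}
# Hypothetical tool call as the model might request it.
requested = {"name": "add_to_order", "args": {"item": "latte"}}
updated = order_node(initial, requested)
```

Because unknown tool names are rejected and each tool controls its own update, the model cannot write arbitrary values into the order.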

Day 4: Domain-Specific Models

- 📝 My Kaggle Notebooks

- 🎒Assignment

- Complete Unit 4: “Domain-Specific LLMs”, which is:

- [Optional] Listen to the summary podcast episode for this unit (created by NotebookLM).

- Read the “Solving Domain-Specific Problems Using LLMs” whitepaper.

- Complete these code labs on Kaggle:


- 💡What You’ll Learn

- In today’s reading, you’ll delve into the creation and application of specialized LLMs like SecLM and MedLM/Med-PaLM, with insights from the researchers who built them.

- In the code labs you will learn how to add real world data to a model beyond its knowledge cut-off by grounding with Google Search. You will also learn how to fine-tune a custom Gemini model using your own labeled data to solve custom tasks.

Summary of the key points & callouts

Day 4 - Google Search grounding [TP]

- Gemini models covered include

gemini-2.0-flash

- Concepts covered include

- Search grounding using `GoogleSearch`

- `grounding_metadata`: includes link to search suggestions, supporting docs, and info on how they were used

- `grounding_chunks`: contains source URI

- `grounding_supports`: for each output text segment (`start_index`, `end_index`), contains the indices of the supporting grounding chunks and the corresponding confidence scores

- Search with tools

- `GoogleSearch`: Google Search grounding tool

- `ToolCodeExecution`: code generation & execution tool
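A sketch of how grounding chunks and grounding supports relate, using plain dictionaries instead of the SDK's response objects. The text, URIs, scores, and the `cite_segments` helper are all invented, and the indices are treated as character offsets, which only matches byte offsets for ASCII text like this.

```python
first = "The cafe opens at 8am."
second = "It closes at 6pm."
response_text = f"{first} {second}"

# Hypothetical grounding data shaped loosely like the metadata described above.
grounding_chunks = [
    {"uri": "https://example.com/opening", "title": "Opening times"},
    {"uri": "https://example.com/hours", "title": "Business hours"},
]
grounding_supports = [
    {
        "start_index": 0,
        "end_index": len(first),
        "grounding_chunk_indices": [0, 1],
        "confidence_scores": [0.92, 0.81],
    },
    {
        "start_index": len(first) + 1,
        "end_index": len(response_text),
        "grounding_chunk_indices": [1],
        "confidence_scores": [0.88],
    },
]


def cite_segments(text, chunks, supports):
    """Pair each supported text segment with its source URIs and scores."""
    cited = []
    for support in supports:
        segment = text[support["start_index"]:support["end_index"]]
        sources = [chunks[i]["uri"] for i in support["grounding_chunk_indices"]]
        cited.append((segment, sources, support["confidence_scores"]))
    return cited


cited = cite_segments(response_text, grounding_chunks, grounding_supports)
for segment, sources, scores in cited:
    print(segment, sources, scores)
```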

Day 4 - Fine tuning a custom model [TP]

- Gemini models covered include

- `gemini-1.5-flash-001`

- `gemini-1.5-flash-001-tuning`: this is deprecated now

- Concepts covered include

- Fine-tuning a custom model

- The tuned model requires no prompting or system instructions and outputs succinct text from the classes we provide in the training data, thereby reducing the total tokens required.

Day 5: MLOps for Generative AI

- 📝 My Kaggle Notebooks

- …

- 🎒Assignment

- Complete Unit 5 - “MLOps for Generative AI”:

- Listen to the summary podcast episode for this unit.

- To complement the podcast, read the “MLOps for Generative AI” whitepaper.

- No codelab for today! During the livestream tomorrow, we will do a code walkthrough and live demo of goo.gle/agent-starter-pack, a resource created for making MLOps for Gen AI easier and accelerating the path to production. Please go through the repository in advance.

- Want to have an interactive conversation? Try adding the whitepaper to NotebookLM.


- 💡What You’ll Learn

- Discover how to adapt MLOps practices for Generative AI and leverage Vertex AI’s tools for foundation models and generative AI applications such as AgentOps for agentic applications.

Miscellaneous

- Gemini comparison

- Evaluation

- Google documentation:

To do or clarify

- Day 1: Foundational Large Language Models & Text Generation and Prompt Engineering

- Temperature vs. Top P vs. Top K:

- Day 2:

- What would happen if we try to embed a text that has 5000 words using the `text-embedding-004` Gemini model, which only supports up to 2048 input tokens?

- https://github.com/google-gemini/cookbook/blob/main/examples/Search_reranking_using_embeddings.ipynb

- https://github.com/google-gemini/cookbook/blob/main/examples/Anomaly_detection_with_embeddings.ipynb

- Day 3:

- I don’t understand the “Compositional function calling” section properly.

- If time permits, try out Further exercises in Google-5-Day-Gen-AI-Intensive-Course > Day 3 - Building an agent with LangGraph

- Day 4

- The code execution using `ToolCodeExecution` is not working in Day 4 - Google Search grounding [TP]

- Fine-tuning is not working in Day 4 - Fine tuning a custom model [TP]. So, try this solution: Tune Gemini models by using supervised fine-tuning, When to use supervised fine-tuning for Gemini, and Gemma model fine-tuning

- Day 5

- The Agent Starter Pack was not working fully due to Vertex and Google Cloud authentication/setup issues. Will try it out during spare time as it seems quite interesting and useful for end-to-end agent development and deployment.